vLLM 是一个被学术界和工业界广泛应用的开源大模型推理系统,它的目标是建立一个最高效易用的开源推理服务系统。

vLLM’s goal: build the fastest and easiest-to-use open-source LLM inference & serving engine.

2025年1月27日,vLLM 社区发布了 V1 版本,在保留 V0 版本的调用API的基础上,将核心代码重构成了一个简单、模块化、易改的代码库。用过 vLLM V0 的都知道,这是一个太庞大的系统,十万行代码,逻辑复杂,像调度器的调度逻辑能把人绕晕。而 V1 的代码非常简洁,将系统的执行逻辑进一步模块化成processorengine_core和output_processor。目前 V1 的代码量仅有五千行,虽然它在很多地方借用了 V0 的代码,但我估计把那些重要的代码加进来也就一万行。所以我认为 vLLM V1 是一个适合用于新手学习的教科书。这篇博客讲解了 V1 版本的核心代码,依据0.7.3发布版本。

V1 版本和 V0 版本的区别

如果你不熟悉 vLLM V0,可以先跳过这一部分。这一部分总结了几个比较重要的区别,我将在后文解释其中细节。

我觉得新版本最大的区别就是砍掉了 Prefill 和 Decode 的概念,并默认使用 chunked prefill。在 V0 中,默认情况下在同一时刻要么执行 Prefill 的请求,要么执行 Decode 的请求(这里的默认是指调度器的schedule_default()函数)。因此它需要三个队列来存储不同状态的请求(这也是 PagedAttention 论文里描述的行为):

waiting队列存储未经过 Prefill 的请求;running队列存储经过 Prefill ,正在 Decode 阶段的请求;swapped队列存储由于显存不够,KV cache 被换出到 CPU 内存上的请求,它们经过了 Prefill,但是优先级较低,所以被抢占,在内存中等待被执行 Decode。

有这三个队列在,调度逻辑就复杂了,比如用一个臭名昭著的_passed_delay()函数来决定何时让waiting队列的请求进入running队列,以充分利用 GPU 的 batch 并行计算能力。也要考虑如何处理被抢占请求等等。V0 版本的细节我不再赘述。

在 V1 中,没有 Prefill 和 Decode,从现在开始,请忘掉 Prefill 和 Decode 是什么。在 V1 中,只有num_tokens和num_computed_tokens。num_tokens指请求目前的长度,即输入长度加输出长度。num_computed_tokens指经过大模型计算,并已经存储过 KV cache 的token个数。它可以满足目前的所有需要:

- Prefill:

num_computed_tokens=0; - Decode:

num_computed_tokens=num_tokens-1,所以只有最后一个token需要被送进大模型作 Decode 生成; - Prefix caching: 让

num_computed_tokens等于命中 KV 缓存的长度。 - Jump decoding: 直接在请求后面增加希望解码的格式,并增加相应的

num_tokens长度。

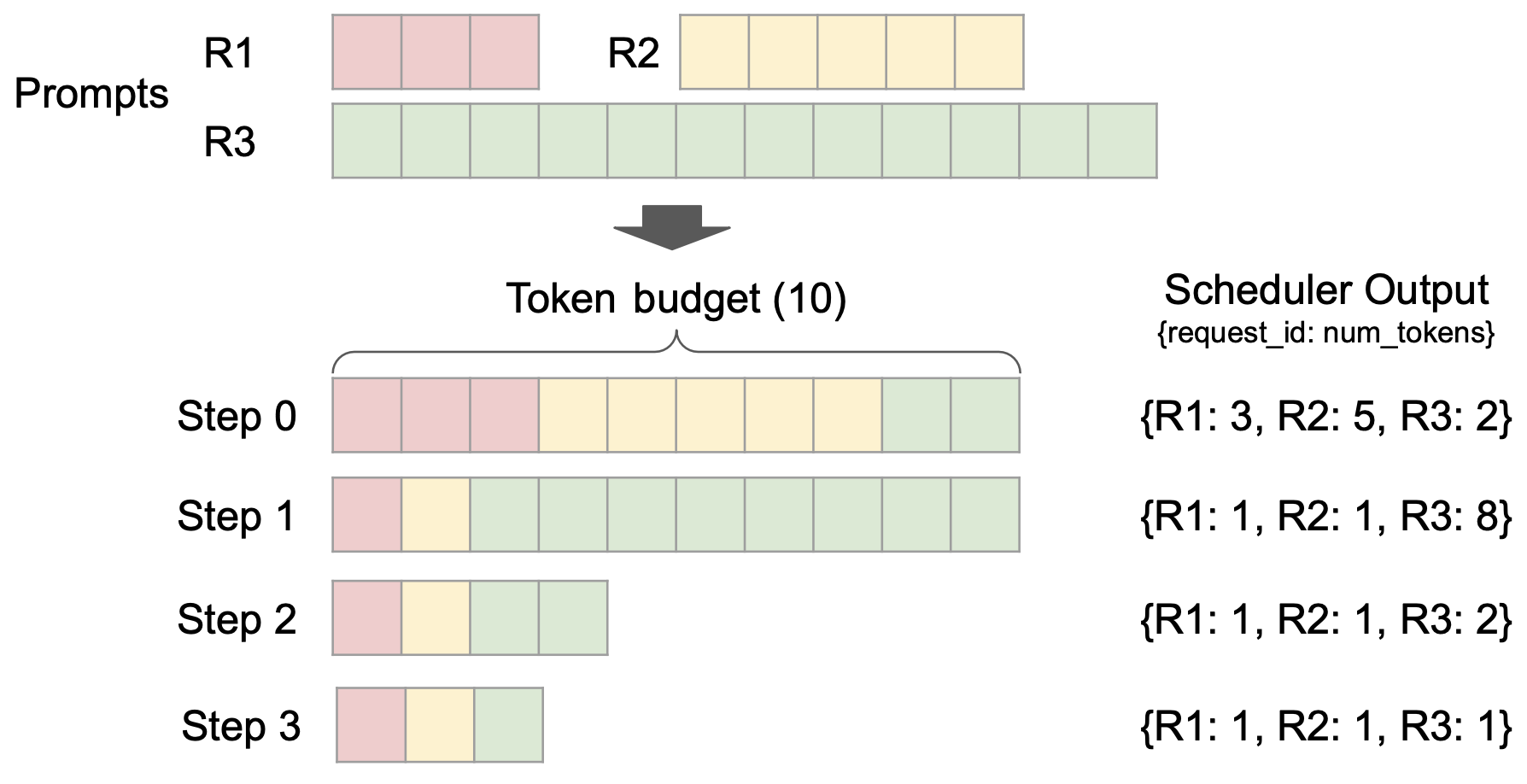

这样一改,可以直接砍掉swapped队列。在 V1 中,被抢占的请求是直接塞到waiting队列的头部,但它自己记录了自己算过多少token(num_computed_tokens),并知道它们的 KV cache 存在哪。这还保证了被抢占请求是比waiting队列中的请求优先级更高。总之,逻辑简化并且效率更高。细节留到后面再讲,这里先放一张图,它解释了为什么 V1 的chunked prefill利用 GPU 更充分。

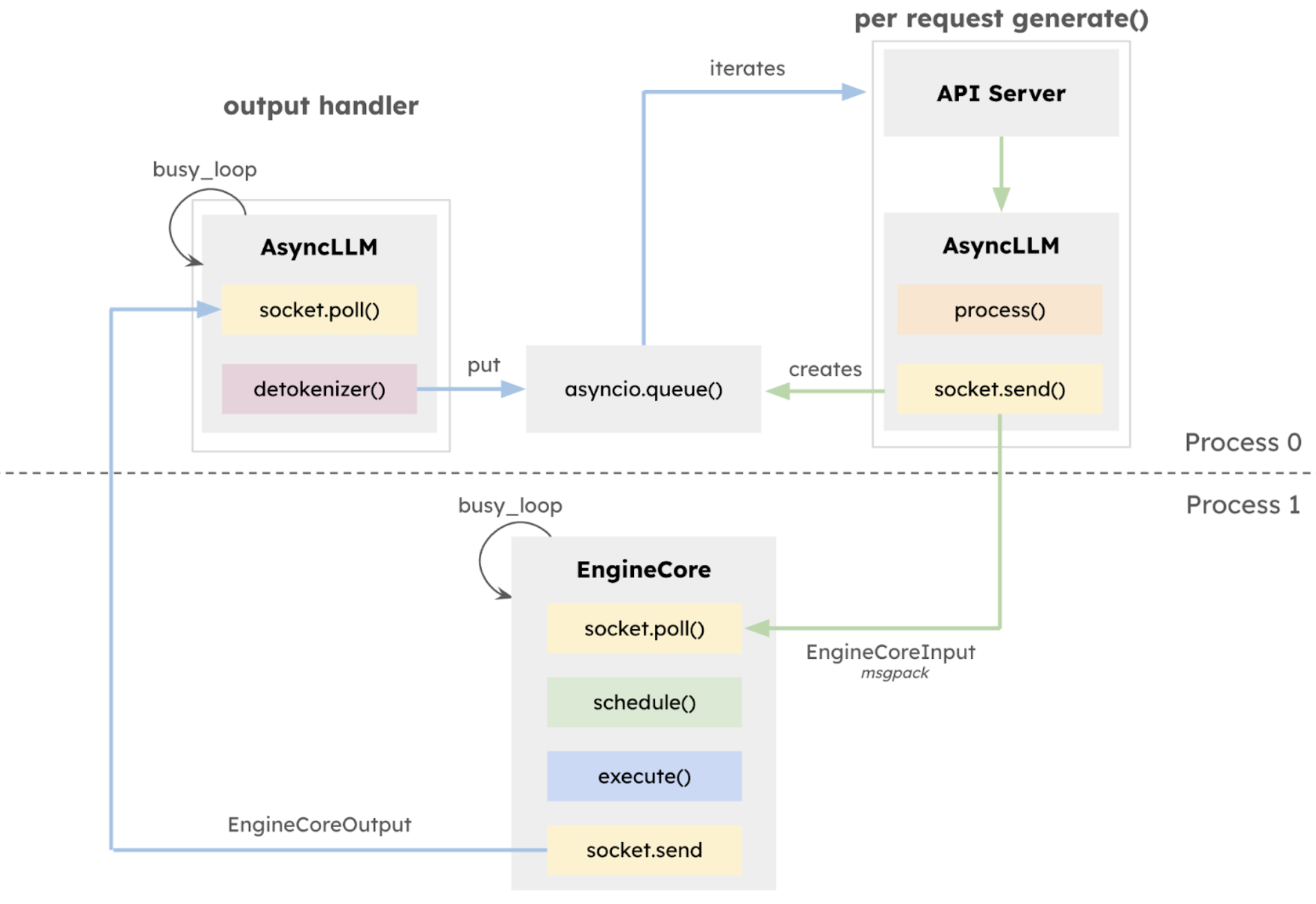

第二个大改动是开了两个进程分别处理。一个是 GPU 密集型任务如 Transformer 执行,另一个是 CPU 密集型任务如 tokenization, multimodal input processing 和 de-tokenization。两个进程同时执行,加快速度。

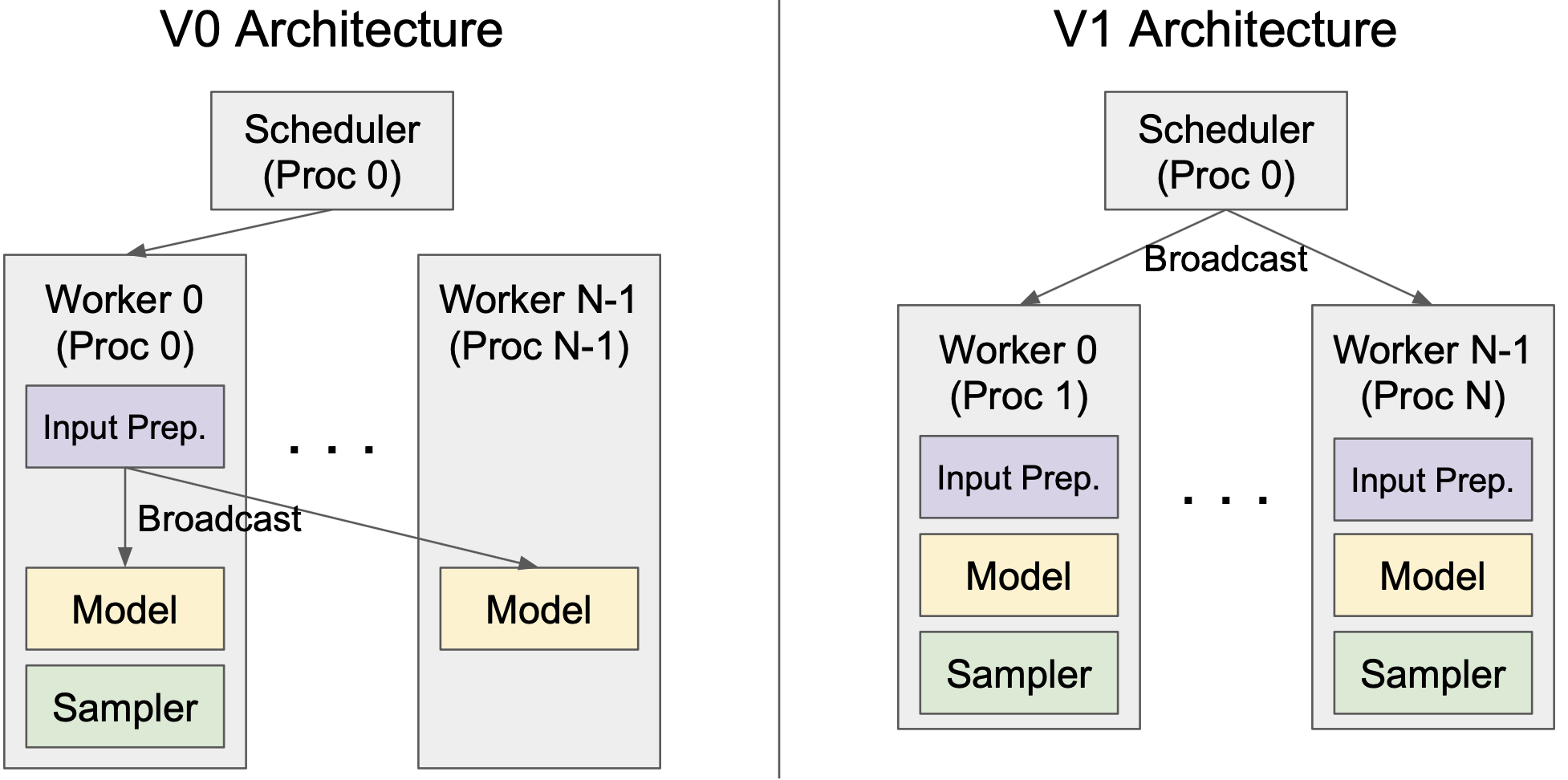

第三个,在张量并行中把输入处理也做并行化,实现了一个对称的并行结构。具体是在 EngineCore 进程中,将每一个 worker 用一个线程管理。

第四个,将 Attention 计算的部分从 CUDA 图中分离出来,使用 PyTorch 后端。这使得针对 Attention 进行优化的一些方法可以被应用进来,如Cascade Attention。还有一些其它的小更新,不再细讲了。

一、入口函数

本博客是一篇 vllm V1 的代码解读。我们注重这个推理系统的执行逻辑,对推理服务的初始化等细节先不讨论。我计划先从调用接口讲起,一层一层地往下解读。在后面的解读中,我会使用文字说明和代码相结合的形式:尽量用文字把代码逻辑说清楚,同时附上代码佐证。读者可以跳过冗长的代码只看文字,但读一遍代码后理解得会更深刻。为了精简,我抄在这里的代码都是与主线有关的函数实现,并不是全部代码。先看 vllm 官方的一个使用样例。

# examples/offline_inference/basic.py

from vllm import LLM, SamplingParams

# 提示词

prompts = ["Hello, my name is",

"The president of the United States is",

"The capital of France is",

"The future of AI is",]

# 采样参数

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

# 初始化大模型

llm = LLM(model="facebook/opt-125m")

# 生成

outputs = llm.generate(prompts, sampling_params)

# 打印

for output in outputs:

prompt = output.prompt

generated_text = output.outputs[0].text

print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

llm = LLM(model="facebook/opt-125m")加载了推理系统的配置和模型参数等。这一部分不复杂,感兴趣的读者可以自行查阅代码。它有一个细节是会做一次 profiling 来看看显存上有多少空余的 KV cache block,具体在vllm/v1/worker/gpu_model_runner.py的GPUModelRunner.profile_run()函数实现。值得一提的是,另一个部署大模型的方法是在命令行中执行vllm serve Qwen/Qwen2.5-1.5B-Instruct,这是在线服务,异步推理模式。本篇博客只涉及基于LLMEngine的离线批处理服务,理解这个之后就容易理解异步模式了。

下一步是进入llm.generate()函数,开始推理,这是本篇博客的重点。目前的LLM类还是用了 V0 的代码,但可以看到get_engine_class()函数中,如果启用 V1,将会从 V1 文件夹中导入LLMEngine类。generate()函数的主要流程是先用_validate_and_add_requests()加入这些请求,再用_run_engine()实现批处理。

_validate_and_add_requests()先对输入的参数做一些检查,随后将每个请求用self.llm_engine.add_request()添加到LLMEngine中。_run_engine()主要就是循环,当 llm_engine 中还有未完成的请求时,循环调用self.llm_engine.step(),并且将结束的请求的输出保存下来。

总之,就是 add_request 一次,循环执行 step,每次 step 都会调用大模型做一次推理计算,直到所有请求结束生成。下面我们将进入 V1 版本的LLMEngine,研究这两个函数。

# vllm/entrypoints/llm.py

class LLM:

"""An LLM for generating texts from given prompts and sampling parameters.

This class includes a tokenizer, a language model (possibly distributed

across multiple GPUs), and GPU memory space allocated for intermediate

states (aka KV cache). Given a batch of prompts and sampling parameters,

this class generates texts from the model, using an intelligent batching

mechanism and efficient memory management.

Args:

model: The name or path of a HuggingFace Transformers model.

tokenizer: The name or path of a HuggingFace Transformers tokenizer.

tokenizer_mode: The tokenizer mode. "auto" will use the fast tokenizer

if available, and "slow" will always use the slow tokenizer.

skip_tokenizer_init: If true, skip initialization of tokenizer and

detokenizer. Expect valid prompt_token_ids and None for prompt

from the input.

trust_remote_code: Trust remote code (e.g., from HuggingFace) when

downloading the model and tokenizer.

allowed_local_media_path: Allowing API requests to read local images

or videos from directories specified by the server file system.

This is a security risk. Should only be enabled in trusted

environments.

tensor_parallel_size: The number of GPUs to use for distributed

execution with tensor parallelism.

dtype: The data type for the model weights and activations. Currently,

we support `float32`, `float16`, and `bfloat16`. If `auto`, we use

the `torch_dtype` attribute specified in the model config file.

However, if the `torch_dtype` in the config is `float32`, we will

use `float16` instead.

quantization: The method used to quantize the model weights. Currently,

we support "awq", "gptq", and "fp8" (experimental).

If None, we first check the `quantization_config` attribute in the

model config file. If that is None, we assume the model weights are

not quantized and use `dtype` to determine the data type of

the weights.

revision: The specific model version to use. It can be a branch name,

a tag name, or a commit id.

tokenizer_revision: The specific tokenizer version to use. It can be a

branch name, a tag name, or a commit id.

seed: The seed to initialize the random number generator for sampling.

gpu_memory_utilization: The ratio (between 0 and 1) of GPU memory to

reserve for the model weights, activations, and KV cache. Higher

values will increase the KV cache size and thus improve the model's

throughput. However, if the value is too high, it may cause out-of-

memory (OOM) errors.

swap_space: The size (GiB) of CPU memory per GPU to use as swap space.

This can be used for temporarily storing the states of the requests

when their `best_of` sampling parameters are larger than 1. If all

requests will have `best_of=1`, you can safely set this to 0.

Otherwise, too small values may cause out-of-memory (OOM) errors.

cpu_offload_gb: The size (GiB) of CPU memory to use for offloading

the model weights. This virtually increases the GPU memory space

you can use to hold the model weights, at the cost of CPU-GPU data

transfer for every forward pass.

enforce_eager: Whether to enforce eager execution. If True, we will

disable CUDA graph and always execute the model in eager mode.

If False, we will use CUDA graph and eager execution in hybrid.

max_seq_len_to_capture: Maximum sequence len covered by CUDA graphs.

When a sequence has context length larger than this, we fall back

to eager mode. Additionally for encoder-decoder models, if the

sequence length of the encoder input is larger than this, we fall

back to the eager mode.

disable_custom_all_reduce: See :class:`~vllm.config.ParallelConfig`

disable_async_output_proc: Disable async output processing.

This may result in lower performance.

hf_overrides: If a dictionary, contains arguments to be forwarded to the

HuggingFace config. If a callable, it is called to update the

HuggingFace config.

compilation_config: Either an integer or a dictionary. If it is an

integer, it is used as the level of compilation optimization. If it

is a dictionary, it can specify the full compilation configuration.

**kwargs: Arguments for :class:`~vllm.EngineArgs`. (See

:ref:`engine-args`)

Note:

This class is intended to be used for offline inference. For online

serving, use the :class:`~vllm.AsyncLLMEngine` class instead.

"""

@deprecate_args(

start_index=2, # Ignore self and model

is_deprecated=lambda: LLM.DEPRECATE_INIT_POSARGS,

additional_message=(

"All positional arguments other than `model` will be "

"replaced with keyword arguments in an upcoming version."),

)

def __init__(

self,

model: str,

tokenizer: Optional[str] = None,

tokenizer_mode: str = "auto",

skip_tokenizer_init: bool = False,

trust_remote_code: bool = False,

allowed_local_media_path: str = "",

tensor_parallel_size: int = 1,

dtype: str = "auto",

quantization: Optional[str] = None,

revision: Optional[str] = None,

tokenizer_revision: Optional[str] = None,

seed: int = 0,

gpu_memory_utilization: float = 0.9,

swap_space: float = 4,

cpu_offload_gb: float = 0,

enforce_eager: Optional[bool] = None,

max_seq_len_to_capture: int = 8192,

disable_custom_all_reduce: bool = False,

disable_async_output_proc: bool = False,

hf_overrides: Optional[HfOverrides] = None,

mm_processor_kwargs: Optional[dict[str, Any]] = None,

# After positional args are removed, move this right below `model`

task: TaskOption = "auto",

override_pooler_config: Optional[PoolerConfig] = None,

compilation_config: Optional[Union[int, dict[str, Any]]] = None,

**kwargs,

) -> None:

'''

LLM constructor.

Note: if enforce_eager is unset (enforce_eager is None)

it defaults to False.

'''

if "disable_log_stats" not in kwargs:

kwargs["disable_log_stats"] = True

if "worker_cls" in kwargs:

worker_cls = kwargs["worker_cls"]

# if the worker_cls is not qualified string name,

# we serialize it using cloudpickle to avoid pickling issues

if isinstance(worker_cls, type):

kwargs["worker_cls"] = cloudpickle.dumps(worker_cls)

if compilation_config is not None:

if isinstance(compilation_config, (int, dict)):

compilation_config_instance = CompilationConfig.from_cli(

str(compilation_config))

else:

compilation_config_instance = compilation_config

else:

compilation_config_instance = None

engine_args = EngineArgs(

model=model,

task=task,

tokenizer=tokenizer,

tokenizer_mode=tokenizer_mode,

skip_tokenizer_init=skip_tokenizer_init,

trust_remote_code=trust_remote_code,

allowed_local_media_path=allowed_local_media_path,

tensor_parallel_size=tensor_parallel_size,

dtype=dtype,

quantization=quantization,

revision=revision,

tokenizer_revision=tokenizer_revision,

seed=seed,

gpu_memory_utilization=gpu_memory_utilization,

swap_space=swap_space,

cpu_offload_gb=cpu_offload_gb,

enforce_eager=enforce_eager,

max_seq_len_to_capture=max_seq_len_to_capture,

disable_custom_all_reduce=disable_custom_all_reduce,

disable_async_output_proc=disable_async_output_proc,

hf_overrides=hf_overrides,

mm_processor_kwargs=mm_processor_kwargs,

override_pooler_config=override_pooler_config,

compilation_config=compilation_config_instance,

**kwargs,

)

# Logic to switch between engines is done at runtime instead of import

# to avoid import order issues

self.engine_class = self.get_engine_class()

self.llm_engine = self.engine_class.from_engine_args(

engine_args, usage_context=UsageContext.LLM_CLASS)

self.request_counter = Counter()

self.default_sampling_params: Union[dict[str, Any], None] = None

@staticmethod

def get_engine_class() -> type[LLMEngine]:

if envs.VLLM_USE_V1:

# Lazy import: the v1 package isn't distributed

from vllm.v1.engine.llm_engine import LLMEngine as V1LLMEngine

return V1LLMEngine # type: ignore

return LLMEngine

@deprecate_kwargs(

"prompt_token_ids",

is_deprecated=lambda: LLM.DEPRECATE_LEGACY,

additional_message="Please use the 'prompts' parameter instead.",

)

def generate(

self,

prompts: Union[Union[PromptType, Sequence[PromptType]],

Optional[Union[str, list[str]]]] = None,

sampling_params: Optional[Union[SamplingParams,

Sequence[SamplingParams]]] = None,

prompt_token_ids: Optional[Union[list[int], list[list[int]]]] = None,

use_tqdm: bool = True,

lora_request: Optional[Union[list[LoRARequest], LoRARequest]] = None,

prompt_adapter_request: Optional[PromptAdapterRequest] = None,

guided_options_request: Optional[Union[LLMGuidedOptions,

GuidedDecodingRequest]] = None,

priority: Optional[list[int]] = None,

) -> list[RequestOutput]:

"""Generates the completions for the input prompts.

This class automatically batches the given prompts, considering

the memory constraint. For the best performance, put all of your prompts

into a single list and pass it to this method.

Args:

prompts: The prompts to the LLM. You may pass a sequence of prompts

for batch inference. See :class:`~vllm.inputs.PromptType`

for more details about the format of each prompts.

sampling_params: The sampling parameters for text generation. If

None, we use the default sampling parameters.

When it is a single value, it is applied to every prompt.

When it is a list, the list must have the same length as the

prompts and it is paired one by one with the prompt.

use_tqdm: Whether to use tqdm to display the progress bar.

lora_request: LoRA request to use for generation, if any.

prompt_adapter_request: Prompt Adapter request to use for

generation, if any.

priority: The priority of the requests, if any.

Only applicable when priority scheduling policy is enabled.

Returns:

A list of ``RequestOutput`` objects containing the

generated completions in the same order as the input prompts.

Note:

Using ``prompts`` and ``prompt_token_ids`` as keyword parameters is

considered legacy and may be deprecated in the future. You should

instead pass them via the ``inputs`` parameter.

"""

runner_type = self.llm_engine.model_config.runner_type

if runner_type not in ["generate", "transcription"]:

messages = [

"LLM.generate() is only supported for (conditional) generation "

"models (XForCausalLM, XForConditionalGeneration).",

]

supported_runner_types = self.llm_engine.model_config \

.supported_runner_types

if "generate" in supported_runner_types:

messages.append(

"Your model supports the 'generate' runner, but is "

f"currently initialized for the '{runner_type}' runner. "

"Please initialize vLLM using `--task generate`.")

raise ValueError(" ".join(messages))

if prompt_token_ids is not None:

parsed_prompts = self._convert_v1_inputs(

prompts=cast(Optional[Union[str, list[str]]], prompts),

prompt_token_ids=prompt_token_ids,

)

else:

parsed_prompts = cast(Union[PromptType, Sequence[PromptType]],

prompts)

if isinstance(guided_options_request, dict):

if len(guided_options_request) > 1:

raise ValueError(

"You can only use one guided decoding but multiple is "

f"specified: {guided_options_request}")

guided_options_request = GuidedDecodingRequest(

**guided_options_request)

if sampling_params is None:

# Use default sampling params.

sampling_params = self.get_default_sampling_params()

self._validate_and_add_requests(

prompts=parsed_prompts,

params=sampling_params,

lora_request=lora_request,

prompt_adapter_request=prompt_adapter_request,

guided_options=guided_options_request,

priority=priority)

outputs = self._run_engine(use_tqdm=use_tqdm)

return self.engine_class.validate_outputs(outputs, RequestOutput)

def _validate_and_add_requests(

self,

prompts: Union[PromptType, Sequence[PromptType]],

params: Union[SamplingParams, Sequence[SamplingParams], PoolingParams,

Sequence[PoolingParams]],

lora_request: Optional[Union[Sequence[LoRARequest], LoRARequest]],

prompt_adapter_request: Optional[PromptAdapterRequest],

guided_options: Optional[GuidedDecodingRequest] = None,

priority: Optional[list[int]] = None,

) -> None:

if guided_options is not None:

warnings.warn(

"guided_options_request is deprecated, use "

"SamplingParams.guided_decoding instead",

DeprecationWarning,

stacklevel=2,

)

if isinstance(prompts, (str, dict)):

# Convert a single prompt to a list.

prompts = [prompts]

num_requests = len(prompts)

if isinstance(params, list) and len(params) != num_requests:

raise ValueError("The lengths of prompts and params "

"must be the same.")

if isinstance(lora_request,

list) and len(lora_request) != num_requests:

raise ValueError("The lengths of prompts and lora_request "

"must be the same.")

for sp in params if isinstance(params, list) else (params, ):

if isinstance(sp, SamplingParams):

self._add_guided_params(sp, guided_options)

# We only care about the final output

sp.output_kind = RequestOutputKind.FINAL_ONLY

# Add requests to the engine.

for i, prompt in enumerate(prompts):

self._add_request(

prompt,

params[i] if isinstance(params, Sequence) else params,

lora_request=lora_request[i] if isinstance(

lora_request, Sequence) else lora_request,

prompt_adapter_request=prompt_adapter_request,

priority=priority[i] if priority else 0,

)

def _add_request(

self,

prompt: PromptType,

params: Union[SamplingParams, PoolingParams],

lora_request: Optional[LoRARequest] = None,

prompt_adapter_request: Optional[PromptAdapterRequest] = None,

priority: int = 0,

) -> None:

request_id = str(next(self.request_counter))

self.llm_engine.add_request(

request_id,

prompt,

params,

lora_request=lora_request,

prompt_adapter_request=prompt_adapter_request,

priority=priority,

)

def _add_guided_params(

self,

params: SamplingParams,

guided_options: Optional[GuidedDecodingRequest] = None):

if guided_options is None:

return params

if params.guided_decoding is not None:

raise ValueError("Cannot set both guided_options_request and "

"params.guided_decoding.")

params.guided_decoding = GuidedDecodingParams(

json=guided_options.guided_json,

regex=guided_options.guided_regex,

choice=guided_options.guided_choice,

grammar=guided_options.guided_grammar,

json_object=guided_options.guided_json_object,

backend=guided_options.guided_decoding_backend,

whitespace_pattern=guided_options.guided_whitespace_pattern)

return params

def _run_engine(

self, *, use_tqdm: bool

) -> list[Union[RequestOutput, PoolingRequestOutput]]:

# Initialize tqdm.

if use_tqdm:

num_requests = self.llm_engine.get_num_unfinished_requests()

pbar = tqdm(

total=num_requests,

desc="Processed prompts",

dynamic_ncols=True,

postfix=(f"est. speed input: {0:.2f} toks/s, "

f"output: {0:.2f} toks/s"),

)

# Run the engine.

outputs: list[Union[RequestOutput, PoolingRequestOutput]] = []

total_in_toks = 0

total_out_toks = 0

while self.llm_engine.has_unfinished_requests():

step_outputs = self.llm_engine.step()

for output in step_outputs:

if output.finished:

outputs.append(output)

if use_tqdm:

if isinstance(output, RequestOutput):

# Calculate tokens only for RequestOutput

assert output.prompt_token_ids is not None

total_in_toks += len(output.prompt_token_ids)

in_spd = total_in_toks / pbar.format_dict["elapsed"]

total_out_toks += sum(

len(stp.token_ids) for stp in output.outputs)

out_spd = (total_out_toks /

pbar.format_dict["elapsed"])

pbar.postfix = (

f"est. speed input: {in_spd:.2f} toks/s, "

f"output: {out_spd:.2f} toks/s")

pbar.update(1)

if use_tqdm:

pbar.close()

# Sort the outputs by request ID.

# This is necessary because some requests may be finished earlier than

# its previous requests.

return sorted(outputs, key=lambda x: int(x.request_id))

二、LLMEngine 总览

LLMEngine有三个核心组件:ProcessorEngineCoreClient和OutputProcessor。在引擎启动(初始化)时,EngineCoreClient会创建一个新进程EngineCore,执行实际的模型推理,而请求的预处理和结果处理在原程的Processor和OutputProcessor中完成,这样 CPU 操作和 GPU 操作可以并行完成。EngineCore又会创建一个或多个线程来做模型的张量并行。每个线程是一个Worker,管理一个本地或远程的 GPU 设备。下图说明了一个请求进入到LLMEngine以后,是怎么被这些模块处理的。

请求通过self.llm_engine.add_request()进入LLMEngine后,先被Processor处理,它复用了 V0 版本的InputPreprocessor将字符串转换成 token,自己再做一些模型特定的预处理(只有少部分模型有这个处理过程)。然后请求的状态(生成了几个 token 以及一些测量结果)会被存在OutputProcessor中,这样LLMEngine就知道自己还有几个请求没完成。llm_engine.add_request()的最后一步是把请求交给EngineCoreClient,它通过自己的input_socket把请求发给EngineCore做真正的模型推理。然后在每次llm_engine.step()时,它查看output_socket中有没有结果返回,如果有,就交给OutputProcessor再返回给用户。请注意这里大模型每迭代一步都会有一个中间结果出来,即每输出一个 token,LLMEngine都会更新一下OutputProcessor中请求的状态并把新 token 输出给用户。

在另一个进程中,EngineCore循环监听input_queue有没有新请求到来,有的话立刻加入到调度器的等待队列中。只要调度器中有未完成的请求,调度器就会调度本步推理要执行的请求及其 token 数。MultiprocExecutor让一个或多个Worker开始工作,最后将推理结果放在output_queue中发给EngineCoreClient。与此同时,循环回到调度器、推理,只要请求没结束(即输出<eos>(End of Sentence)等停止标志)。

# vllm/v1/engine/llm_engine.py

class LLMEngine:

"""Legacy LLMEngine for backwards compatibility."""

def __init__(

self,

vllm_config: VllmConfig,

executor_class: Type[Executor],

log_stats: bool,

usage_context: UsageContext = UsageContext.ENGINE_CONTEXT,

stat_loggers: Optional[Dict[str, StatLoggerBase]] = None,

input_registry: InputRegistry = INPUT_REGISTRY,

mm_registry: MultiModalRegistry = MULTIMODAL_REGISTRY,

use_cached_outputs: bool = False,

multiprocess_mode: bool = False,

) -> None:

self.model_config = vllm_config.model_config

self.cache_config = vllm_config.cache_config

# Tokenizer (+ ensure liveness if running in another process).

self.tokenizer = init_tokenizer_from_configs(

model_config=vllm_config.model_config,

scheduler_config=vllm_config.scheduler_config,

parallel_config=vllm_config.parallel_config,

lora_config=vllm_config.lora_config)

self.tokenizer.ping()

# Processor (convert Inputs --> EngineCoreRequests)

self.processor = Processor(model_config=vllm_config.model_config,

cache_config=vllm_config.cache_config,

lora_config=vllm_config.lora_config,

tokenizer=self.tokenizer,

input_registry=input_registry,

mm_registry=mm_registry)

# OutputProcessor (convert EngineCoreOutputs --> RequestOutput).

self.output_processor = OutputProcessor(self.tokenizer,

log_stats=False)

# EngineCore (gets EngineCoreRequests and gives EngineCoreOutputs)

self.engine_core = EngineCoreClient.make_client(

multiprocess_mode=multiprocess_mode,

asyncio_mode=False,

vllm_config=vllm_config,

executor_class=executor_class,

log_stats=False, # FIXME: implement

)

def add_request(

self,

request_id: str,

prompt: PromptType,

params: Union[SamplingParams, PoolingParams],

arrival_time: Optional[float] = None,

lora_request: Optional[LoRARequest] = None,

trace_headers: Optional[Mapping[str, str]] = None,

prompt_adapter_request: Optional[PromptAdapterRequest] = None,

priority: int = 0,

) -> None:

# 1) Process raw inputs into the request.

request = self.processor.process_inputs(request_id, prompt, params,

arrival_time, lora_request,

trace_headers,

prompt_adapter_request,

priority)

# 2) Make a new RequestState and queue.

self.output_processor.add_request(request)

# 3) Add the request to EngineCore.

self.engine_core.add_request(request)

def step(self) -> List[RequestOutput]:

# 1) Get EngineCoreOutput from the EngineCore.

outputs = self.engine_core.get_output()

# 2) Process EngineCoreOutputs.

processed_outputs = self.output_processor.process_outputs(

outputs.outputs)

# 3) Abort any reqs that finished due to stop strings.

self.engine_core.abort_requests(processed_outputs.reqs_to_abort)

return processed_outputs.request_outputs

从这份代码中可以看出,V1 版本将所有功能下沉,使得代码精简易懂。

三、Processor

在Processor.process_inputs()函数中,主要执行了preprocessed_inputs = self.input_preprocessor.preprocess(),它与 V0 版本相同。注释写得也很详细,先 tokenize 文本,再补全多模态 token,再应用 prompt adapter。有一行可以先不看:processed_inputs = self.input_processor(preprocessed_inputs),大多数模型在这一步直接跳过。它需要在模型文件中 register processor 才会生效,读者可以在遇到特殊的模型时再来研究这行代码。

# vllm/v1/engine/processor.py

class Processor:

def __init__(

self,

model_config: ModelConfig,

cache_config: CacheConfig,

lora_config: Optional[LoRAConfig],

tokenizer: BaseTokenizerGroup,

input_registry: InputRegistry = INPUT_REGISTRY,

mm_registry: MultiModalRegistry = MULTIMODAL_REGISTRY,

):

self.model_config = model_config

self.cache_config = cache_config

self.lora_config = lora_config

self.tokenizer = tokenizer

self.generation_config_fields = model_config.try_get_generation_config(

)

self.input_preprocessor = InputPreprocessor(model_config,

self.tokenizer,

mm_registry)

self.input_processor = input_registry.create_input_processor(

model_config)

# Multi-modal (huggingface) input mapper

self.mm_input_cache_client = MMInputCacheClient(model_config)

# Multi-modal hasher (for images)

self.use_hash = (not model_config.disable_mm_preprocessor_cache) or \

cache_config.enable_prefix_caching

def process_inputs(

self,

request_id: str,

prompt: PromptType,

params: Union[SamplingParams, PoolingParams],

arrival_time: Optional[float] = None,

lora_request: Optional[LoRARequest] = None,

trace_headers: Optional[Mapping[str, str]] = None,

prompt_adapter_request: Optional[PromptAdapterRequest] = None,

priority: int = 0,

) -> EngineCoreRequest:

# TODO(woosuk): Support pooling models.

# TODO(woosuk): Support encoder-decoder models.

self._validate_logprobs(params)

self._validate_lora(lora_request)

if arrival_time is None:

arrival_time = time.time()

assert priority == 0, "vLLM V1 does not support priority at the moment."

assert trace_headers is None, "vLLM V1 does not support tracing yet."

# Process inputs, which includes:

# 1. Tokenize text prompt, with LoRA request if one exists.

# 2. For multimodal models with a merged preprocessor, preprocess

# multimodal data and expand prompt token ids accordingly.

# 3. Apply prompt adapter to prompt token ids if one exists.

preprocessed_inputs = self.input_preprocessor.preprocess(

prompt,

request_id=request_id,

lora_request=lora_request,

prompt_adapter_request=prompt_adapter_request,

)

eos_token_id = self.input_preprocessor.get_eos_token_id(lora_request)

# Process prompt and prompt token ids.

# Only applicable to multimodal models with legacy input processor.

processed_inputs = self.input_processor(preprocessed_inputs)

self._validate_model_inputs(processed_inputs)

if is_encoder_decoder_inputs(processed_inputs):

decoder_inputs = SingletonInputsAdapter(

processed_inputs["decoder"])

encoder_inputs = SingletonInputsAdapter(

processed_inputs["encoder"])

else:

decoder_inputs = SingletonInputsAdapter(processed_inputs)

encoder_inputs = None

# TODO: Impl encoder-decoder

if encoder_inputs is not None:

raise NotImplementedError

assert isinstance(params, SamplingParams)

# TODO: can we avoid cloning here in multiproc case

sampling_params = params.clone()

sampling_params.update_from_generation_config(

self.generation_config_fields, eos_token_id)

# Multimodal related.

# Compute MM hashes (if enabled)

mm_hashes = None

if self.use_hash:

# Use mm_hashes from processed inputs if the model has merged

# input processor.

if decoder_inputs.multi_modal_hashes:

mm_hashes = decoder_inputs.multi_modal_hashes

# Fallback to using MultiModalHasher directly.

else:

mm_hashes = MultiModalHasher.hash_prompt_mm_data(prompt)

# For merged preprocessor, mm_data is already mm_inputs

precomputed_mm_inputs: Optional[list[MultiModalKwargs]] = None

decoder_mm_data = decoder_inputs.multi_modal_data

if isinstance(decoder_mm_data, MultiModalKwargs):

# The output of merged multi-modal processor (`decoder_mm_data`)

# contains the kwargs for all items from all modalities.

# This code separates them so that there is one set of kwargs

# per item per modality.

precomputed_mm_inputs = [

MultiModalKwargs.from_items([item])

for modality in decoder_mm_data.modalities

for item in decoder_mm_data.get_items(modality)

]

mm_positions = decoder_inputs.multi_modal_placeholders

# Last-mile processing of multimodal metadata and inputs.

if mm_positions:

# Merge and flatten multimodal placeholders, hashes and inputs

# from dictionaries to lists, and sort them by each item's position

# in the input sequence.

# NOTE: interleaved modalities are not supported.

(

sorted_modalities,

sorted_mm_positions,

sorted_mm_hashes,

) = merge_and_sort_multimodal_metadata(

mm_positions,

mm_hashes,

)

# NOTE: Sort multimodal inputs/kwargs ONLY IF there are multiple

# modalities involved AND the model supports merged input processor.

if len(sorted_modalities) > 1 and precomputed_mm_inputs:

modality_order_dict = {

modality: order

for order, modality in enumerate(sorted_modalities)

}

# Sanity check to make sure each multimodal input has only one

# modality key.

for mm_input in precomputed_mm_inputs:

assert len(mm_input.modalities) == 1

# Sort MultiModalKwags to match sorted_mm_positions

precomputed_mm_inputs = sorted(

precomputed_mm_inputs,

key=lambda mm_input: modality_order_dict[list(

mm_input.modalities)[0]])

# Apply mm input cache update and legacy input mapper if one exists.

sorted_mm_inputs = self.mm_input_cache_client.process_inputs(

mm_data=decoder_mm_data,

mm_hashes=sorted_mm_hashes,

mm_processor_kwargs=decoder_inputs.mm_processor_kwargs,

precomputed_mm_inputs=precomputed_mm_inputs,

)

else:

sorted_mm_inputs = None

sorted_mm_hashes = None

sorted_mm_positions = None

return EngineCoreRequest(

request_id=request_id,

prompt=decoder_inputs.prompt,

prompt_token_ids=decoder_inputs.prompt_token_ids,

mm_inputs=sorted_mm_inputs,

mm_hashes=sorted_mm_hashes,

mm_placeholders=sorted_mm_positions,

sampling_params=sampling_params,

eos_token_id=eos_token_id,

arrival_time=arrival_time,

lora_request=lora_request,

)

四、EngineCore

接下来,进入了最核心的EngingCore,我们先看看原进程的EngineCoreClient如何管理EngineCore。

V0 版本使用的是一个子类InprocClient,即没有区分两个进程,在一个进程里执行,这个实现就是直接调用EngineCore的add_request()step()等等即可。V1 版本默认使用MPClient,实现起来就稍微复杂一点了。MPClient使用pyzmq与新进程通信。pyzmq是 ZeroMQ 库的 Python 绑定,用于在分布式系统中进行消息传递。MPClient会在收到新进程传来的signal.SIGUSR1时终止所有子进程,收到信号说明子进程出现了一些问题。从下面的代码中可以看出,MPClient是通过self._send_input()来调用EngineCore的。

# vllm/v1/engine/core_client.py

class MPClient(EngineCoreClient):

"""

MPClient: base client for multi-proc EngineCore.

EngineCore runs in a background process busy loop, getting

new EngineCoreRequests and returning EngineCoreOutputs

* pushes EngineCoreRequests via input_socket

* pulls EngineCoreOutputs via output_socket

* AsyncMPClient subclass for AsyncLLM usage

* SyncMPClient subclass for LLM usage

"""

def __init__(

self,

asyncio_mode: bool,

vllm_config: VllmConfig,

executor_class: Type[Executor],

log_stats: bool,

):

# The child processes will send SIGUSR1 when unrecoverable

# errors happen. We kill the process tree here so that the

# stack trace is very evident.

# TODO(rob): rather than killing the main process, we should

# figure out how to raise an AsyncEngineDeadError and

# handle at the API server level so we can return a better

# error code to the clients calling VLLM.

def sigusr1_handler(signum, frame):

logger.fatal("Got fatal signal from worker processes, shutting "

"down. See stack trace above for root cause issue.")

kill_process_tree(os.getpid())

signal.signal(signal.SIGUSR1, sigusr1_handler)

# Serialization setup.

self.encoder = MsgpackEncoder()

self.decoder = MsgpackDecoder(EngineCoreOutputs)

# ZMQ setup.

self.ctx = (

zmq.asyncio.Context() # type: ignore[attr-defined]

if asyncio_mode else zmq.Context()) # type: ignore[attr-defined]

# Note(rob): shutdown function cannot be a bound method,

# else the gc cannot collect the object.

self._finalizer = weakref.finalize(self, lambda x: x.destroy(linger=0),

self.ctx)

# Paths and sockets for IPC.

output_path = get_open_zmq_ipc_path()

input_path = get_open_zmq_ipc_path()

self.output_socket = make_zmq_socket(self.ctx, output_path,

zmq.constants.PULL)

self.input_socket = make_zmq_socket(self.ctx, input_path,

zmq.constants.PUSH)

# Start EngineCore in background process.

self.proc_handle = BackgroundProcHandle(

input_path=input_path,

output_path=output_path,

process_name="EngineCore",

target_fn=EngineCoreProc.run_engine_core,

process_kwargs={

"vllm_config": vllm_config,

"executor_class": executor_class,

"log_stats": log_stats,

})

self.utility_results: Dict[int, AnyFuture] = {}

def shutdown(self):

"""Clean up background resources."""

if hasattr(self, "proc_handle"):

self.proc_handle.shutdown()

self._finalizer()

class SyncMPClient(MPClient):

"""Synchronous client for multi-proc EngineCore."""

def __init__(self, vllm_config: VllmConfig, executor_class: Type[Executor],

log_stats: bool):

super().__init__(

asyncio_mode=False,

vllm_config=vllm_config,

executor_class=executor_class,

log_stats=log_stats,

)

self.outputs_queue: queue.Queue[EngineCoreOutputs] = queue.Queue()

# Ensure that the outputs socket processing thread does not have

# a ref to the client which prevents gc.

output_socket = self.output_socket

decoder = self.decoder

utility_results = self.utility_results

outputs_queue = self.outputs_queue

def process_outputs_socket():

try:

while True:

(frame, ) = output_socket.recv_multipart(copy=False)

outputs = decoder.decode(frame.buffer)

if outputs.utility_output:

_process_utility_output(outputs.utility_output,

utility_results)

else:

outputs_queue.put_nowait(outputs)

except zmq.error.ContextTerminated:

# Expected when the class is GC'd / during process termination.

pass

# Process outputs from engine in separate thread.

Thread(target=process_outputs_socket, daemon=True).start()

def get_output(self) -> EngineCoreOutputs:

return self.outputs_queue.get()

def _send_input(self, request_type: EngineCoreRequestType,

request: Any) -> None:

# (RequestType, SerializedRequest)

msg = (request_type.value, self.encoder.encode(request))

self.input_socket.send_multipart(msg, copy=False)

def add_request(self, request: EngineCoreRequest) -> None:

# NOTE: text prompt is not needed in the core engine as it has been

# tokenized.

request.prompt = None

self._send_input(EngineCoreRequestType.ADD, request)

def abort_requests(self, request_ids: List[str]) -> None:

if len(request_ids) > 0:

self._send_input(EngineCoreRequestType.ABORT, request_ids)

class AsyncMPClient(MPClient):

"""Asyncio-compatible client for multi-proc EngineCore."""

def __init__(self, vllm_config: VllmConfig, executor_class: Type[Executor],

log_stats: bool):

super().__init__(

asyncio_mode=True,

vllm_config=vllm_config,

executor_class=executor_class,

log_stats=log_stats,

)

self.outputs_queue: Optional[asyncio.Queue[EngineCoreOutputs]] = None

self.queue_task: Optional[asyncio.Task] = None

async def _start_output_queue_task(self):

# Perform IO in separate task to parallelize as much as possible.

# Avoid task having direct reference back to the client.

self.outputs_queue = asyncio.Queue()

output_socket = self.output_socket

decoder = self.decoder

utility_results = self.utility_results

outputs_queue = self.outputs_queue

async def process_outputs_socket():

while True:

(frame, ) = await output_socket.recv_multipart(copy=False)

outputs: EngineCoreOutputs = decoder.decode(frame.buffer)

if outputs.utility_output:

_process_utility_output(outputs.utility_output,

utility_results)

else:

outputs_queue.put_nowait(outputs)

self.queue_task = asyncio.create_task(process_outputs_socket())

async def get_output_async(self) -> EngineCoreOutputs:

if self.outputs_queue is None:

await self._start_output_queue_task()

assert self.outputs_queue is not None

return await self.outputs_queue.get()

async def _send_input(self, request_type: EngineCoreRequestType,

request: Any) -> None:

msg = (request_type.value, self.encoder.encode(request))

await self.input_socket.send_multipart(msg, copy=False)

if self.outputs_queue is None:

await self._start_output_queue_task()

async def add_request_async(self, request: EngineCoreRequest) -> None:

# NOTE: text prompt is not needed in the core engine as it has been

# tokenized.

request.prompt = None

await self._send_input(EngineCoreRequestType.ADD, request)

async def abort_requests_async(self, request_ids: List[str]) -> None:

if len(request_ids) > 0:

await self._send_input(EngineCoreRequestType.ABORT, request_ids)

# vllm/v1/utils.py

class BackgroundProcHandle:

"""

Utility class to handle creation, readiness, and shutdown

of background processes used by the AsyncLLM and LLMEngine.

"""

def __init__(

self,

input_path: str,

output_path: str,

process_name: str,

target_fn: Callable,

process_kwargs: Dict[Any, Any],

):

context = get_mp_context()

reader, writer = context.Pipe(duplex=False)

assert ("ready_pipe" not in process_kwargs

and "input_path" not in process_kwargs

and "output_path" not in process_kwargs)

process_kwargs["ready_pipe"] = writer

process_kwargs["input_path"] = input_path

process_kwargs["output_path"] = output_path

# Run busy loop in background process.

self.proc = context.Process(target=target_fn, kwargs=process_kwargs)

self._finalizer = weakref.finalize(self, shutdown, self.proc,

input_path, output_path)

self.proc.start()

# Wait for startup.

if reader.recv()["status"] != "READY":

raise RuntimeError(f"{process_name} initialization failed. "

"See root cause above.")

def shutdown(self):

self._finalizer()

具体而言,在MPClient的初始化中,使用BackgroundProcHandle(..., target_fn=EngineCoreProc.run_engine_core, ...)开启新进程。BackgroundProcHandle的任务是保证这个进程成功启动,并在结束时关掉它。然后在EngineCoreProc.run_engine_core()函数中,它会实例化一个EngineCoreProc对象,并开始运行engine_core.run_busy_loop()。EngineCoreProc的初始化会创建两个守护线程分别用于监听MPClient的input_socket和发送消息给output_socket,还会告诉BackgroudProcHandle启动成功(ready_pipe.send({"status": "READY"}))。

在run_busy_loop()中,EngineCore会首先检查input_queue中有没有MPClient的指令(比如来了一个新的用户请求),如果有立即执行,然后执行step()推理、输出一步推理结果。如果所有请求都处理完了,它会每隔POLLING_TIMEOUT_S=2.5秒去检查一下input_queue。在step()函数中,先用调度器调度一下本次推理哪些请求,再让model_executor执行这一步,最后更新调度器中的请求状态并把结果放入output_queue中。model_executor会调用底层的大模型计算,即nn.Module.forward()函数,这里封装得很好,我不再往下拆解了。调度器的行为更值得关注。

# vllm/v1/engine/core.py

POLLING_TIMEOUT_S = 2.5

class EngineCore:

"""Inner loop of vLLM's Engine."""

def __init__(

self,

vllm_config: VllmConfig,

executor_class: Type[Executor],

log_stats: bool,

):

assert vllm_config.model_config.runner_type != "pooling"

logger.info("Initializing a V1 LLM engine (v%s) with config: %s",

VLLM_VERSION, vllm_config)

self.log_stats = log_stats

# Setup Model.

self.model_executor = executor_class(vllm_config)

# Setup KV Caches and update CacheConfig after profiling.

num_gpu_blocks, num_cpu_blocks = self._initialize_kv_caches(

vllm_config)

vllm_config.cache_config.num_gpu_blocks = num_gpu_blocks

vllm_config.cache_config.num_cpu_blocks = num_cpu_blocks

# Setup scheduler.

self.scheduler = Scheduler(

scheduler_config=vllm_config.scheduler_config,

model_config=vllm_config.model_config,

cache_config=vllm_config.cache_config,

lora_config=vllm_config.lora_config,

speculative_config=vllm_config.speculative_config,

log_stats=self.log_stats,

)

# Setup MM Input Mapper.

self.mm_input_cache_server = MMInputCacheServer(

vllm_config.model_config)

# Setup batch queue for pipeline parallelism.

# Batch queue for scheduled batches. This enables us to asynchronously

# schedule and execute batches, and is required by pipeline parallelism

# to eliminate pipeline bubbles.

self.batch_queue_size = self.model_executor.max_concurrent_batches

self.batch_queue: Optional[queue.Queue[Tuple[Future[ModelRunnerOutput],

SchedulerOutput]]] = None

if self.batch_queue_size > 1:

logger.info("Batch queue is enabled with size %d",

self.batch_queue_size)

self.batch_queue = queue.Queue(self.batch_queue_size)

def add_request(self, request: EngineCoreRequest):

"""Add request to the scheduler."""

if request.mm_hashes is not None:

# Here, if hash exists for a multimodal input, then it will be

# fetched from the cache, else it will be added to the cache.

# Note that the cache here is mirrored with the client cache, so

# anything that has a hash must have a HIT cache entry here

# as well.

assert request.mm_inputs is not None

request.mm_inputs = self.mm_input_cache_server.get_and_update(

request.mm_inputs, request.mm_hashes)

req = Request.from_engine_core_request(request)

self.scheduler.add_request(req)

def abort_requests(self, request_ids: List[str]):

"""Abort requests from the scheduler."""

# TODO: The scheduler doesn't really need to know the

# specific finish reason, TBD whether we propagate that

# (i.e. client-aborted vs stop criteria met).

self.scheduler.finish_requests(request_ids,

RequestStatus.FINISHED_ABORTED)

def step(self) -> EngineCoreOutputs:

"""Schedule, execute, and make output."""

if not self.scheduler.has_unfinished_requests():

return EngineCoreOutputs(

outputs=[], scheduler_stats=self.scheduler.make_stats())

scheduler_output = self.scheduler.schedule()

output = self.model_executor.execute_model(scheduler_output)

engine_core_outputs = self.scheduler.update_from_output(

scheduler_output, output) # type: ignore

return engine_core_outputs

def shutdown(self):

self.model_executor.shutdown()

class EngineCoreProc(EngineCore):

"""ZMQ-wrapper for running EngineCore in background process."""

def __init__(

self,

input_path: str,

output_path: str,

ready_pipe: Connection,

vllm_config: VllmConfig,

executor_class: Type[Executor],

log_stats: bool,

):

super().__init__(vllm_config, executor_class, log_stats)

# Background Threads and Queues for IO. These enable us to

# overlap ZMQ socket IO with GPU since they release the GIL,

# and to overlap some serialization/deserialization with the

# model forward pass.

# Threads handle Socket <-> Queues and core_busy_loop uses Queue.

self.input_queue: queue.Queue[Tuple[EngineCoreRequestType,

Any]] = queue.Queue()

self.output_queue: queue.Queue[EngineCoreOutputs] = queue.Queue()

threading.Thread(target=self.process_input_socket,

args=(input_path, ),

daemon=True).start()

threading.Thread(target=self.process_output_socket,

args=(output_path, ),

daemon=True).start()

# Send Readiness signal to EngineClient.

ready_pipe.send({"status": "READY"})

@staticmethod

def run_engine_core(*args, **kwargs):

"""Launch EngineCore busy loop in background process."""

# Signal handler used for graceful termination.

# SystemExit exception is only raised once to allow this and worker

# processes to terminate without error

shutdown_requested = False

# Ensure we can serialize transformer config after spawning

maybe_register_config_serialize_by_value()

def signal_handler(signum, frame):

nonlocal shutdown_requested

if not shutdown_requested:

shutdown_requested = True

raise SystemExit()

# Either SIGTERM or SIGINT will terminate the engine_core

signal.signal(signal.SIGTERM, signal_handler)

signal.signal(signal.SIGINT, signal_handler)

parent_process = psutil.Process().parent()

engine_core = None

try:

engine_core = EngineCoreProc(*args, **kwargs)

engine_core.run_busy_loop()

except SystemExit:

logger.debug("EngineCore interrupted.")

except Exception:

traceback = get_exception_traceback()

logger.error("EngineCore hit an exception: %s", traceback)

parent_process.send_signal(signal.SIGUSR1)

finally:

if engine_core is not None:

engine_core.shutdown()

def run_busy_loop(self):

"""Core busy loop of the EngineCore."""

# 这里self.step_with_batch_queue我们先不看,它是为了消除流水线并行的气泡的

step_fn = (self.step

if self.batch_queue is None else self.step_with_batch_queue)

# Loop until process is sent a SIGINT or SIGTERM

while True:

# 1) Poll the input queue until there is work to do.

if not self.scheduler.has_unfinished_requests():

while True:

try:

req = self.input_queue.get(timeout=POLLING_TIMEOUT_S)

self._handle_client_request(*req)

break

except queue.Empty:

logger.debug("EngineCore busy loop waiting.")

# Break out the loop so we can log_stats in step().

if self.log_stats:

break

except BaseException:

raise

# 2) Handle any new client requests.

while not self.input_queue.empty():

req = self.input_queue.get_nowait()

self._handle_client_request(*req)

# 3) Step the engine core.

outputs = step_fn()

# 4) Put EngineCoreOutputs into the output queue.

if outputs is not None:

self.output_queue.put_nowait(outputs)

def _handle_client_request(self, request_type: EngineCoreRequestType,

request: Any) -> None:

"""Dispatch request from client."""

if request_type == EngineCoreRequestType.ADD:

self.add_request(request)

elif request_type == EngineCoreRequestType.ABORT:

self.abort_requests(request)

elif request_type == EngineCoreRequestType.UTILITY:

call_id, method_name, args = request

output = UtilityOutput(call_id)

try:

method = getattr(self, method_name)

output.result = method(

*self._convert_msgspec_args(method, args))

except BaseException as e:

logger.exception("Invocation of %s method failed", method_name)

output.failure_message = (f"Call to {method_name} method"

f" failed: {str(e)}")

self.output_queue.put_nowait(

EngineCoreOutputs(utility_output=output))

@staticmethod

def _convert_msgspec_args(method, args):

"""If a provided arg type doesn't match corresponding target method

arg type, try converting to msgspec object."""

if not args:

return args

arg_types = signature(method).parameters.values()

assert len(args) <= len(arg_types)

return tuple(

msgspec.convert(v, type=p.annotation) if isclass(p.annotation)

and issubclass(p.annotation, msgspec.Struct)

and not isinstance(v, p.annotation) else v

for v, p in zip(args, arg_types))

def process_input_socket(self, input_path: str):

"""Input socket IO thread."""

# Msgpack serialization decoding.

add_request_decoder = MsgpackDecoder(EngineCoreRequest)

generic_decoder = MsgpackDecoder()

with zmq_socket_ctx(input_path, zmq.constants.PULL) as socket:

while True:

# (RequestType, RequestData)

type_frame, data_frame = socket.recv_multipart(copy=False)

request_type = EngineCoreRequestType(bytes(type_frame.buffer))

# Deserialize the request data.

decoder = add_request_decoder if (

request_type

== EngineCoreRequestType.ADD) else generic_decoder

request = decoder.decode(data_frame.buffer)

# Push to input queue for core busy loop.

self.input_queue.put_nowait((request_type, request))

def process_output_socket(self, output_path: str):

"""Output socket IO thread."""

# Msgpack serialization encoding.

encoder = MsgpackEncoder()

# Reuse send buffer.

buffer = bytearray()

with zmq_socket_ctx(output_path, zmq.constants.PUSH) as socket:

while True:

outputs = self.output_queue.get()

encoder.encode_into(outputs, buffer)

socket.send_multipart((buffer, ), copy=False)

五、Scheduler

调度器很重要,因为它决定了推理系统能不能充分利用硬件的计算能力,提高系统的吞吐量。这里也是 V0 和 V1 版本的最大区别,所以我单独分了一个部分来讲解。调度流程主要有两步:分别是调度running队列和waiting队列。

在running队列中,依次取出请求,检查它所能被调度的最大token个数,然后看看kv_cache_manager能否为这个请求分配 KV cache 的空间,如果不能就从running队列的尾部抢占其它请求。抢占的具体做法是释放 KV cache 空间,然后将请求插入到waiting队列的头部,这样就保证了被抢占的请求比后来的、正在等待的请求优先被调度。如果抢占到自己这个请求,说明自己请求是不够分配 KV cache 的,就跳出循环。(对于多模态大模型的 encoder,它还会检查 encoder 的 cache 是否足够分配)

遍历过running队列后,如果没有请求被抢占,说明token_budget还没有用完,还能调度新请求,就开始遍历waiting队列,也是一样的逻辑,反复调度直到token_budget用完或没有新请求。这时就没有抢占操作了,因为waiting队列里的请求就是优先级最低的了,不能抢占已经在running队列里的请求。

这里先不讲KVCacheManager的底层实现,因为它现在封装得比较好,我们先把它当成一块硬盘用就行。我后面有时间再写个附录来分析这个复杂的组件。

# vllm/v1/core/scheduler.py

class Scheduler:

def __init__(

self,

scheduler_config: SchedulerConfig,

model_config: ModelConfig,

cache_config: CacheConfig,

lora_config: Optional[LoRAConfig],

speculative_config: Optional[SpeculativeConfig],

log_stats: bool,

) -> None:

self.scheduler_config = scheduler_config

self.cache_config = cache_config

self.lora_config = lora_config

self.speculative_config = speculative_config

self.log_stats = log_stats

# Scheduling constraints.

self.max_num_running_reqs = self.scheduler_config.max_num_seqs

self.max_num_scheduled_tokens = \

self.scheduler_config.max_num_batched_tokens

self.max_model_len = self.scheduler_config.max_model_len

num_gpu_blocks = cache_config.num_gpu_blocks

assert isinstance(num_gpu_blocks, int) and num_gpu_blocks > 0

# Create the KV cache manager.

self.kv_cache_manager = KVCacheManager(

block_size=self.cache_config.block_size,

num_gpu_blocks=num_gpu_blocks,

max_model_len=self.max_model_len,

sliding_window=self.cache_config.sliding_window,

enable_caching=self.cache_config.enable_prefix_caching,

log_stats=self.log_stats)

self.block_size = self.cache_config.block_size

# req_id -> Request

self.requests: Dict[str, Request] = {}

# Priority queues for requests.

self.waiting: Deque[Request] = deque()

self.running: List[Request] = []

# The requests that have been scheduled and are being executed

# by the executor.

self.scheduled_req_ids: Set[str] = set()

# The request IDs that are finished in between the previous and the

# current steps. This is used to notify the workers about the finished

# requests so that they can free the cached states for those requests.

# This is flushed at the end of each scheduling step.

self.finished_req_ids: Set[str] = set()

# OPTIMIZATION: Cache the CachedRequestData objects to avoid creating

# them at each scheduling step.

# Request id -> CachedRequestData

self._cached_reqs_data: Dict[str, CachedRequestData] = {}

# Encoder-related.

# Calculate encoder cache size if applicable

# NOTE: For now we use the same budget for both compute and space.

# This can be changed when we make encoder cache for embedding caching

# across requests.

encoder_compute_budget, encoder_cache_size = compute_encoder_budget(

model_config=model_config,

scheduler_config=scheduler_config,

)

# NOTE(woosuk): Here, "encoder" includes the vision encoder (and

# projector if needed). Currently, we assume that the encoder also

# has the Transformer architecture (e.g., ViT).

self.max_num_encoder_input_tokens = encoder_compute_budget

# NOTE: For the models without encoder (e.g., text-only models),

# the encoder cache will not be initialized because cache size is 0

# for these models.

self.encoder_cache_manager = EncoderCacheManager(

cache_size=encoder_cache_size)

def schedule(self) -> "SchedulerOutput":

# NOTE(woosuk) on the scheduling algorithm:

# There's no "decoding phase" nor "prefill phase" in the scheduler.

# Each request just has the num_computed_tokens and

# num_tokens_with_spec. num_tokens_with_spec =

# len(prompt_token_ids) + len(output_token_ids) + len(spec_token_ids).

# At each step, the scheduler tries to assign tokens to the requests

# so that each request's num_computed_tokens can catch up its

# num_tokens_with_spec. This is general enough to cover

# chunked prefills, prefix caching, speculative decoding,

# and the "jump decoding" optimization in the future.

scheduled_new_reqs: List[Request] = []

scheduled_resumed_reqs: List[Request] = []

scheduled_running_reqs: List[Request] = []

preempted_reqs: List[Request] = []

req_to_new_block_ids: Dict[str, List[int]] = {}

num_scheduled_tokens: Dict[str, int] = {}

token_budget = self.max_num_scheduled_tokens

# Encoder-related.

scheduled_encoder_inputs: Dict[str, List[int]] = {}

encoder_budget = self.max_num_encoder_input_tokens

# Spec decode-related.

scheduled_spec_decode_tokens: Dict[str, List[int]] = {}

# For logging.

scheduled_timestamp = time.monotonic()

# First, schedule the RUNNING requests.

req_index = 0

while req_index < len(self.running) and token_budget > 0:

request = self.running[req_index]

if request.request_id in self.scheduled_req_ids:

# This request has already been scheduled.

req_index += 1

continue

num_new_tokens = (request.num_tokens_with_spec -

request.num_computed_tokens)

num_new_tokens = min(num_new_tokens, token_budget)

assert num_new_tokens > 0

# Schedule encoder inputs.

encoder_inputs_to_schedule, num_new_tokens, new_encoder_budget = (

self._try_schedule_encoder_inputs(request,

request.num_computed_tokens,

num_new_tokens,

encoder_budget))

if num_new_tokens == 0:

# The request cannot be scheduled because the encoder budget

# or the encoder cache is exhausted.

# NOTE(woosuk): Here, by doing `continue` instead of `break`,

# we do not strictly follow the FCFS scheduling policy and

# allow the lower-priority requests to be scheduled.

req_index += 1

continue

while True:

new_blocks = self.kv_cache_manager.allocate_slots(

request, num_new_tokens)

if new_blocks is None:

# The request cannot be scheduled.

# Preempt the lowest-priority request.

preempted_req = self.running.pop()

self.kv_cache_manager.free(preempted_req)

preempted_req.status = RequestStatus.PREEMPTED

preempted_req.num_computed_tokens = 0

self.waiting.appendleft(preempted_req)

preempted_reqs.append(preempted_req)

if preempted_req == request:

# No more request to preempt.

can_schedule = False

break

else:

# The request can be scheduled.

can_schedule = True

break

if not can_schedule:

break

assert new_blocks is not None

# Schedule the request.

scheduled_running_reqs.append(request)

self.scheduled_req_ids.add(request.request_id)

req_to_new_block_ids[request.request_id] = [

b.block_id for b in new_blocks

]

num_scheduled_tokens[request.request_id] = num_new_tokens

token_budget -= num_new_tokens

req_index += 1

# Speculative decode related.

if request.spec_token_ids:

num_scheduled_spec_tokens = (num_new_tokens +

request.num_computed_tokens -

request.num_tokens)

if num_scheduled_spec_tokens > 0:

# Trim spec_token_ids list to num_scheduled_spec_tokens.

del request.spec_token_ids[num_scheduled_spec_tokens:]

scheduled_spec_decode_tokens[request.request_id] = (

request.spec_token_ids)

# Encoder-related.

if encoder_inputs_to_schedule:

scheduled_encoder_inputs[request.request_id] = (

encoder_inputs_to_schedule)

# Allocate the encoder cache.

for i in encoder_inputs_to_schedule:

self.encoder_cache_manager.allocate(request, i)

encoder_budget = new_encoder_budget

# Record the LoRAs in scheduled_running_reqs

requested_loras: Set[int] = set()

if self.lora_config:

requested_loras = set(

req.lora_request.lora_int_id for req in scheduled_running_reqs

if req.lora_request and req.lora_request.lora_int_id > 0)

assert len(requested_loras) <= self.lora_config.max_loras

# Next, schedule the WAITING requests.

if not preempted_reqs:

while self.waiting and token_budget > 0:

if len(self.running) == self.max_num_running_reqs:

break

request = self.waiting[0]

# Check that adding the request still respects the max_loras

# constraint.

if self.lora_config and request.lora_request:

req_lora_id = request.lora_request.lora_int_id

if len(requested_loras) == self.lora_config.max_loras and (

req_lora_id not in requested_loras):

# Cannot schedule.

# TODO (varun): This means all the other requests in

# the WAITING queue will be blocked by this request,

# even if,

# 1. these other requests do not use LoRA, or,

# 2. these other requests use the already requested

# LoRAs.

# This is too conservative and could be optimized.

break

# Get already-cached tokens.

computed_blocks, num_computed_tokens = \

self.kv_cache_manager.get_computed_blocks(request)

# Number of tokens to be scheduled.

# We use `request.num_tokens` instead of

# `request.num_prompt_tokens` to consider the resumed requests,

# which have output tokens.

num_new_tokens = request.num_tokens - num_computed_tokens

if num_new_tokens == 0:

# This happens when prompt length is divisible by the block

# size and all blocks are cached. Now we force to recompute

# the last block. Note that we have to re-compute an entire

# block because allocate_slots() assumes num_computed_tokens

# is always a multiple of the block size. This limitation

# can potentially be removed in the future to slightly

# improve the performance.

num_computed_tokens -= self.block_size

num_new_tokens = self.block_size

computed_blocks.pop()

num_new_tokens = min(num_new_tokens, token_budget)

assert num_new_tokens > 0

# Schedule encoder inputs.

(encoder_inputs_to_schedule, num_new_tokens,

new_encoder_budget) = self._try_schedule_encoder_inputs(

request, num_computed_tokens, num_new_tokens,

encoder_budget)

if num_new_tokens == 0:

# The request cannot be scheduled.

break

new_blocks = self.kv_cache_manager.allocate_slots(

request, num_new_tokens, computed_blocks)

if new_blocks is None:

# The request cannot be scheduled.

break

self.waiting.popleft()

self.running.append(request)

self.scheduled_req_ids.add(request.request_id)

if request.status == RequestStatus.WAITING:

scheduled_new_reqs.append(request)

self.request_scheduled(request, scheduled_timestamp)

elif request.status == RequestStatus.PREEMPTED:

scheduled_resumed_reqs.append(request)

else:

raise RuntimeError(

f"Invalid request status: {request.status}")

if self.lora_config and request.lora_request:

requested_loras.add(request.lora_request.lora_int_id)

req_to_new_block_ids[request.request_id] = [

b.block_id for b in computed_blocks + new_blocks

]

num_scheduled_tokens[request.request_id] = num_new_tokens

token_budget -= num_new_tokens

request.status = RequestStatus.RUNNING

request.num_computed_tokens = num_computed_tokens

# Encoder-related.

if encoder_inputs_to_schedule:

scheduled_encoder_inputs[request.request_id] = (

encoder_inputs_to_schedule)

# Allocate the encoder cache.

for i in encoder_inputs_to_schedule:

self.encoder_cache_manager.allocate(request, i)

encoder_budget = new_encoder_budget

# Check if the scheduling constraints are satisfied.

total_num_scheduled_tokens = sum(num_scheduled_tokens.values())

assert total_num_scheduled_tokens <= self.max_num_scheduled_tokens

assert token_budget >= 0

assert len(self.running) <= self.max_num_running_reqs

# Since some requests in the RUNNING queue may not be scheduled in

# this step, the total number of scheduled requests can be smaller than

# len(self.running).

assert (len(scheduled_new_reqs) + len(scheduled_resumed_reqs) +

len(scheduled_running_reqs) <= len(self.running))

# Get the longest common prefix among all requests in the running queue.

# This can be potentially used for cascade attention.

num_common_prefix_blocks = 0

if self.running:

any_request = self.running[0]

num_common_prefix_blocks = (

self.kv_cache_manager.get_num_common_prefix_blocks(

any_request, len(self.running)))

# Construct the scheduler output.

new_reqs_data = [

NewRequestData.from_request(req,

req_to_new_block_ids[req.request_id])

for req in scheduled_new_reqs

]

resumed_reqs_data = [

self._make_cached_request_data(

req,

num_scheduled_tokens[req.request_id],

len(scheduled_spec_decode_tokens.get(req.request_id, ())),

req_to_new_block_ids[req.request_id],

resumed_from_preemption=True,

) for req in scheduled_resumed_reqs

]

running_reqs_data = [

self._make_cached_request_data(

req,

num_scheduled_tokens[req.request_id],

len(scheduled_spec_decode_tokens.get(req.request_id, ())),

req_to_new_block_ids[req.request_id],

resumed_from_preemption=False,

) for req in scheduled_running_reqs

]

scheduler_output = SchedulerOutput(

scheduled_new_reqs=new_reqs_data,

scheduled_cached_reqs=resumed_reqs_data + running_reqs_data,

num_scheduled_tokens=num_scheduled_tokens,

total_num_scheduled_tokens=total_num_scheduled_tokens,

scheduled_spec_decode_tokens=scheduled_spec_decode_tokens,

scheduled_encoder_inputs=scheduled_encoder_inputs,

num_common_prefix_blocks=num_common_prefix_blocks,

# finished_req_ids is an existing state in the scheduler,

# instead of being newly scheduled in this step.

# It contains the request IDs that are finished in between

# the previous and the current steps.

finished_req_ids=self.finished_req_ids,

free_encoder_input_ids=self.encoder_cache_manager.get_freed_ids(),

)

self.finished_req_ids = set()

return scheduler_output

def _make_cached_request_data(

self,

request: Request,

num_scheduled_tokens: int,

num_scheduled_spec_tokens: int,

new_block_ids: List[int],

resumed_from_preemption: bool,

) -> "CachedRequestData":

# OPTIMIZATION: Cache the CachedRequestData objects to avoid creating

# them at each scheduling step.

num_computed_tokens = request.num_computed_tokens

num_regular_tokens = num_scheduled_tokens - num_scheduled_spec_tokens

new_token_ids = request.all_token_ids[

num_computed_tokens:num_computed_tokens + num_regular_tokens]

req_data = self._cached_reqs_data.get(request.request_id)

if req_data is not None:

req_data.resumed_from_preemption = resumed_from_preemption

req_data.new_token_ids = new_token_ids

req_data.new_block_ids = new_block_ids

req_data.num_computed_tokens = num_computed_tokens

else:

req_data = CachedRequestData.from_request(request,

resumed_from_preemption,

new_token_ids,

new_block_ids)

self._cached_reqs_data[request.request_id] = req_data

return req_data

六、OutputProcessor

经历了这么多复杂的逻辑,终于到了最后的输出。可以看到OutputProcessor就是用request_states来管理各请求的状态。因为LLMEngine和EngineCore在两个进程里,LLMEngine就用OutputProcessor记录请求状态、并在输出时加上这些状态信息。OutputProcessor主要就是做 detokenize 和计算状态、打包状态信息。

# vllm/v1/engine/output_processor.py

class OutputProcessor:

"""Process EngineCoreOutputs into RequestOutputs."""

def __init__(

self,

tokenizer: BaseTokenizerGroup,

log_stats: bool,

):

self.log_stats = log_stats

self.tokenizer = tokenizer

self.request_states: Dict[str, RequestState] = {}

def abort_requests(

self,

request_ids: List[str],

) -> None:

for request_id in request_ids:

self.request_states.pop(request_id, None)

def add_request(

self,

request: EngineCoreRequest,

queue: Optional[asyncio.Queue[RequestOutput]] = None,

) -> None:

request_id = request.request_id

if request_id in self.request_states:

raise ValueError(f"Request id {request_id} already running.")

self.request_states[request_id] = RequestState.from_new_request(

tokenizer=self.tokenizer.get_lora_tokenizer(request.lora_request),

request=request,

queue=queue,

log_stats=self.log_stats)

def process_outputs(

self,

engine_core_outputs: List[EngineCoreOutput],

engine_core_timestamp: Optional[float] = None,

iteration_stats: Optional[IterationStats] = None,

) -> OutputProcessorOutput:

"""

Process the EngineCoreOutputs:

1) Compute stats for logging

2) Detokenize

3) Create and handle RequestOutput objects:

* If there is a queue (for usage with AsyncLLM),

put the RequestOutput objects into the queue for

handling by the per-request generate() tasks.

* If there is no queue (for usage with LLMEngine),

return a list of RequestOutput objects.

****************** NOTE FOR DEVELOPERS ******************

VLLM V1 minimizes the number of python loops over the full

batch to ensure system overheads are minimized. This is the

only function that should loop over EngineCoreOutputs.

If you need to touch every element of the batch, do it from

within the loop below.

**********************************************************

"""

request_outputs: List[RequestOutput] = []

reqs_to_abort: List[str] = []

for engine_core_output in engine_core_outputs:

req_id = engine_core_output.request_id

req_state = self.request_states.get(req_id)

if req_state is None:

# Ignore output for already-aborted request.

continue

# 1) Compute stats for this iteration.

self._update_stats_from_output(req_state, engine_core_output,

engine_core_timestamp,

iteration_stats)

new_token_ids = engine_core_output.new_token_ids

finish_reason = engine_core_output.finish_reason

stop_reason = engine_core_output.stop_reason

# TODO(andy): prompt logprobs + chunked prefill can

# result in engine core returning an output for a

# partial prefill (in order to send back partial

# prompt logprobs.) This breaks the invariant that

# process_outputs is only operating on engine core

# outputs associated with non-partial completions.

# Currently this is handled by having `is_prefilling`

# check for new decoded tokens, indicating that

# the completion is not partial.

#

# Follow up will aggregate partial prompt logprobs

# in the EngineCore.

req_state.is_prefilling = not new_token_ids

# 2) Detokenize the token ids into text and check for stop

# strings.

stop_string = req_state.detokenizer.update(new_token_ids)

if stop_string and finish_reason != FinishReason.STOP:

finish_reason = FinishReason.STOP

stop_reason = stop_string

# 3) Compute sample and prompt logprobs for request,

# if required.

req_state.logprobs_processor.update_from_output(engine_core_output)

# 4) Create and handle RequestOutput objects.

if request_output := self._make_request_output(

req_state, new_token_ids, finish_reason, stop_reason):

if req_state.queue is not None:

# AsyncLLM: put into queue for handling by generate().

req_state.queue.put_nowait(request_output)

else:

# LLMEngine: return list of RequestOutputs.

request_outputs.append(request_output)

# Free completed requests.

if request_output.finished:

self.request_states.pop(req_id)

if not engine_core_output.finished:

# If req not finished in EngineCore, but Detokenizer

# detected stop string, abort needed in EngineCore.

reqs_to_abort.append(req_id)

# Track per-request stats

self._update_stats_from_finished(req_state, request_output,

finish_reason,

iteration_stats)

return OutputProcessorOutput(

request_outputs=request_outputs,

reqs_to_abort=reqs_to_abort,

)

解剖一个麻雀

在Linux刚发布时,代码量也是差不多一万行。后来的故事我们都知道,这个系统的代码量逐渐膨胀,如今已经超过4000万行。现在读它的源码来学习Linux操作系统已经不太现实,但我们读它最初版本的代码还是能管中窥豹。

我写这篇博客的目的就是解剖一个麻雀。麻雀虽小,五脏俱全。虽然现在的 vLLM 还很小,不支持很多功能,但它是一个很好的学习材料。未来几十年,它也会以很快的速度发展、代码量膨胀。二十年、三十年以后再回来看这篇博客,希望它仍然是一个很好的大模型推理系统教程。

转载请标明出处